Building Blocks of Transformers: 2. Position Representation

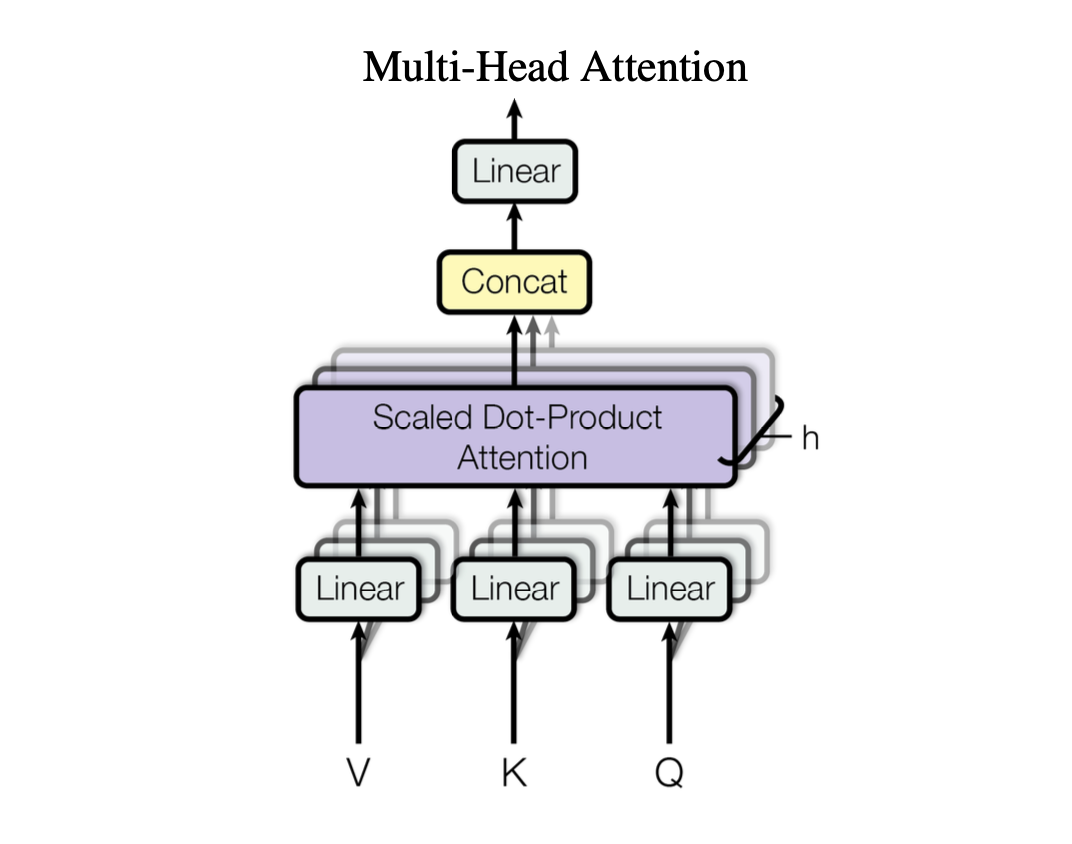

Attention

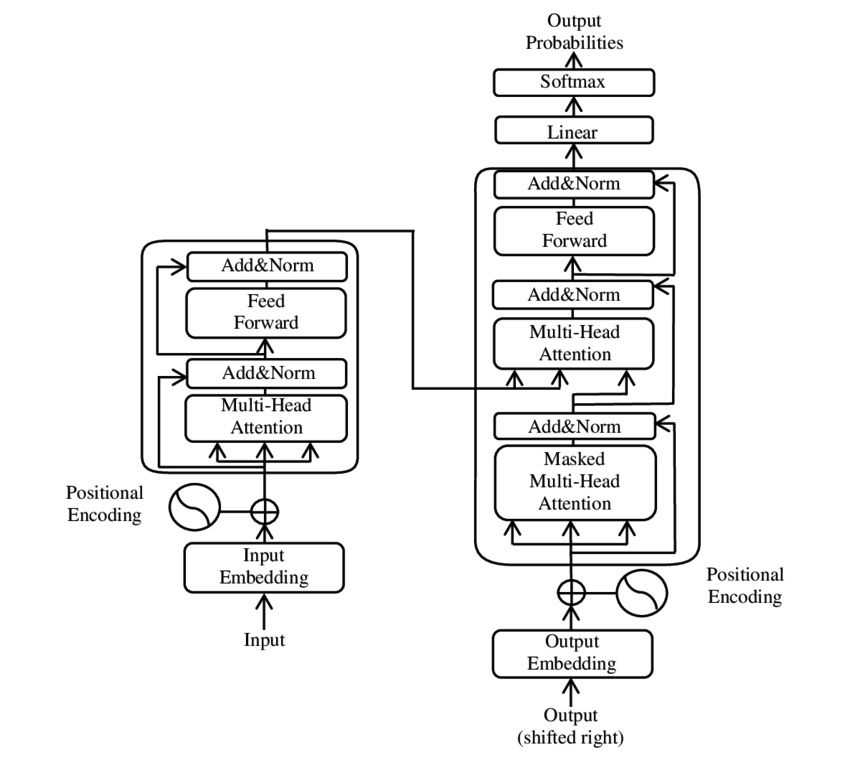

Transformers

Machine Learning

1. Positional Encoding Consider the sequence "He can always be seen working hard." It is distinctly different from "He can hardly be seen working." As can be seen, a slight change in the position of a word conveys a…
